Process/Methodology

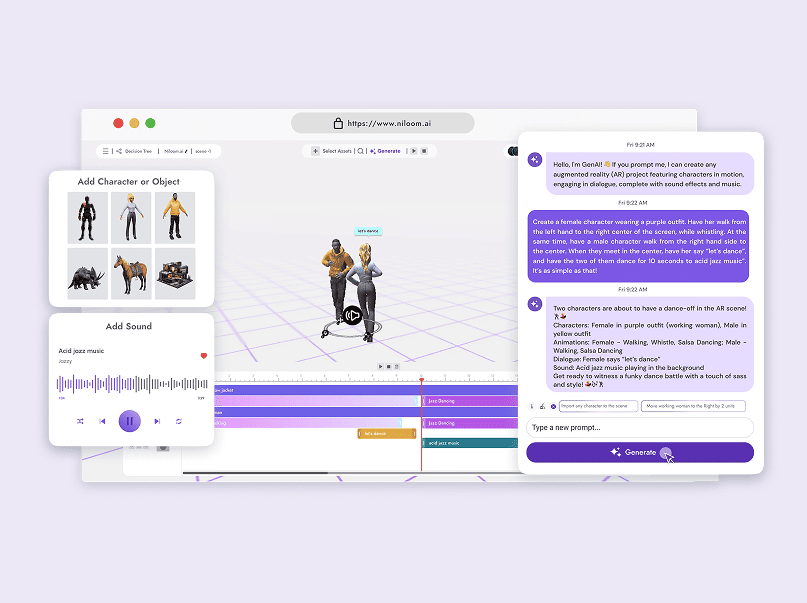

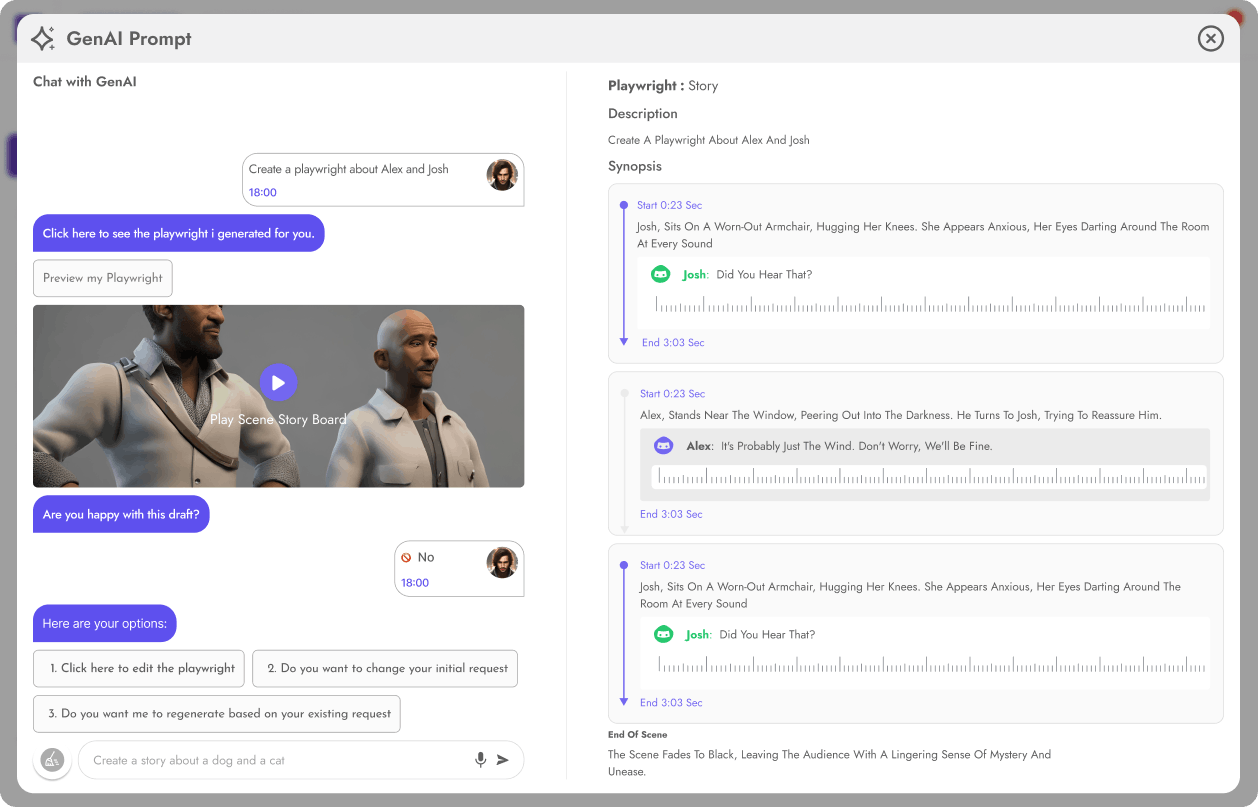

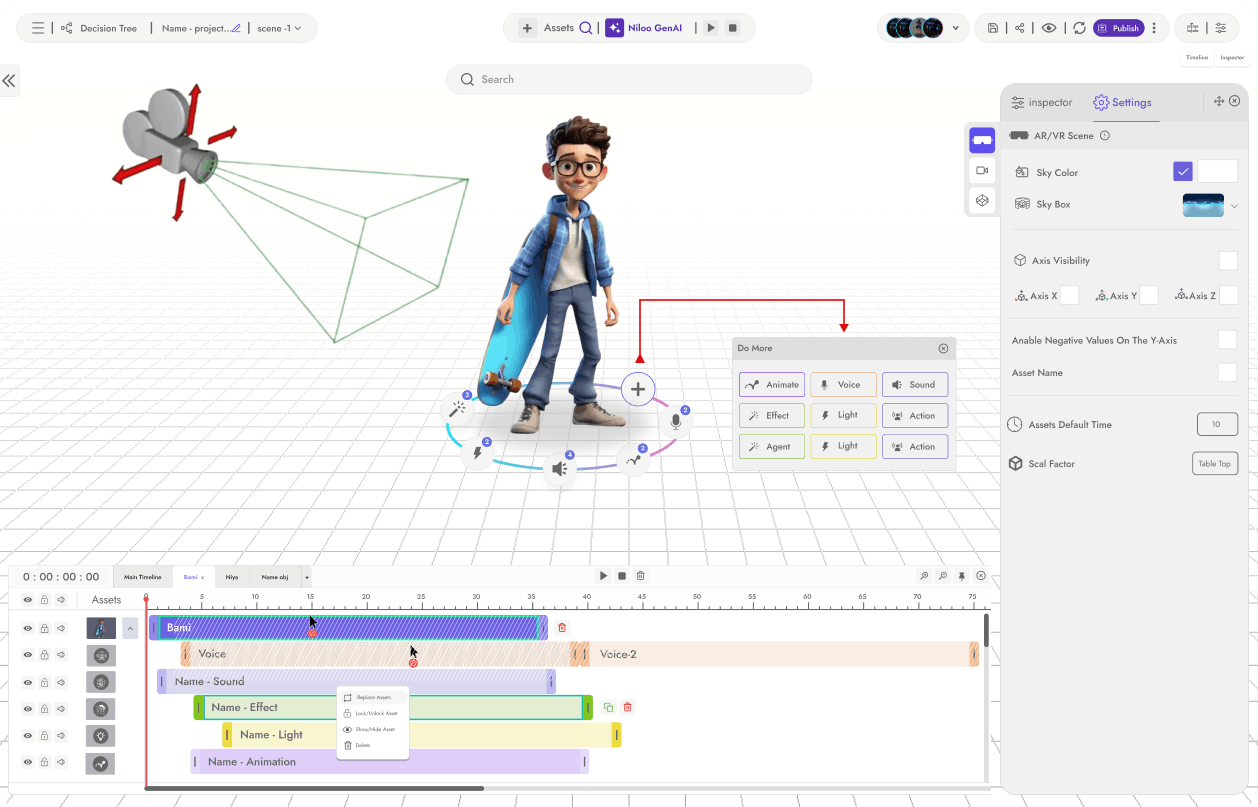

I initiated the design process through collaborative ideation workshops, working closely with the CEO, engineers, AI specialists, and the creative team to define the UX vision of our generative AI platform. Together, we mapped the end-to-end journey of a system that transforms simple text prompts into fully immersive VR/AR scenes, customizable either manually or through our AI agent, NilooAI.

I moved quickly into low-fidelity explorations, producing rapid sketches to experiment with prompt flows, asset libraries, and real-time preview integrations. These early concepts helped us clarify how users would move from imagination to spatial output with minimal friction.

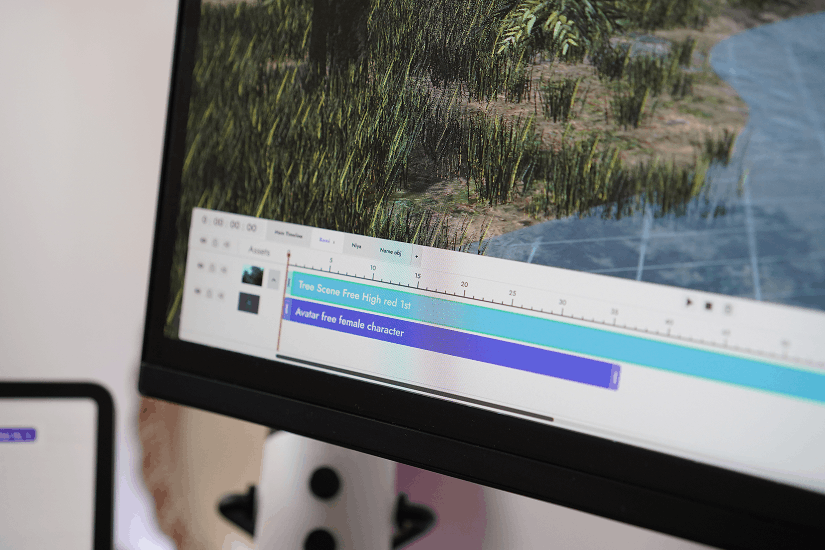

From there, I developed mid-fidelity clickable prototypes to validate the core journey: prompt → generate → edit → publish. These prototypes allowed us to test interaction logic, refine the editing experience, and ensure that AI-powered generation felt intuitive rather than technical.

The process was highly iterative, with frequent cross-functional reviews and early AI feature integration, particularly around handling ambiguous prompts through contextual suggestions, smart examples, and adaptive guidance. Throughout, I placed strong emphasis on responsive browser design and intuitive spatial metaphors, ensuring the experience felt both powerful and accessible across devices.
